NLP from scratch

最近实习在学NLP,总结一下综述性的内容吧

介绍

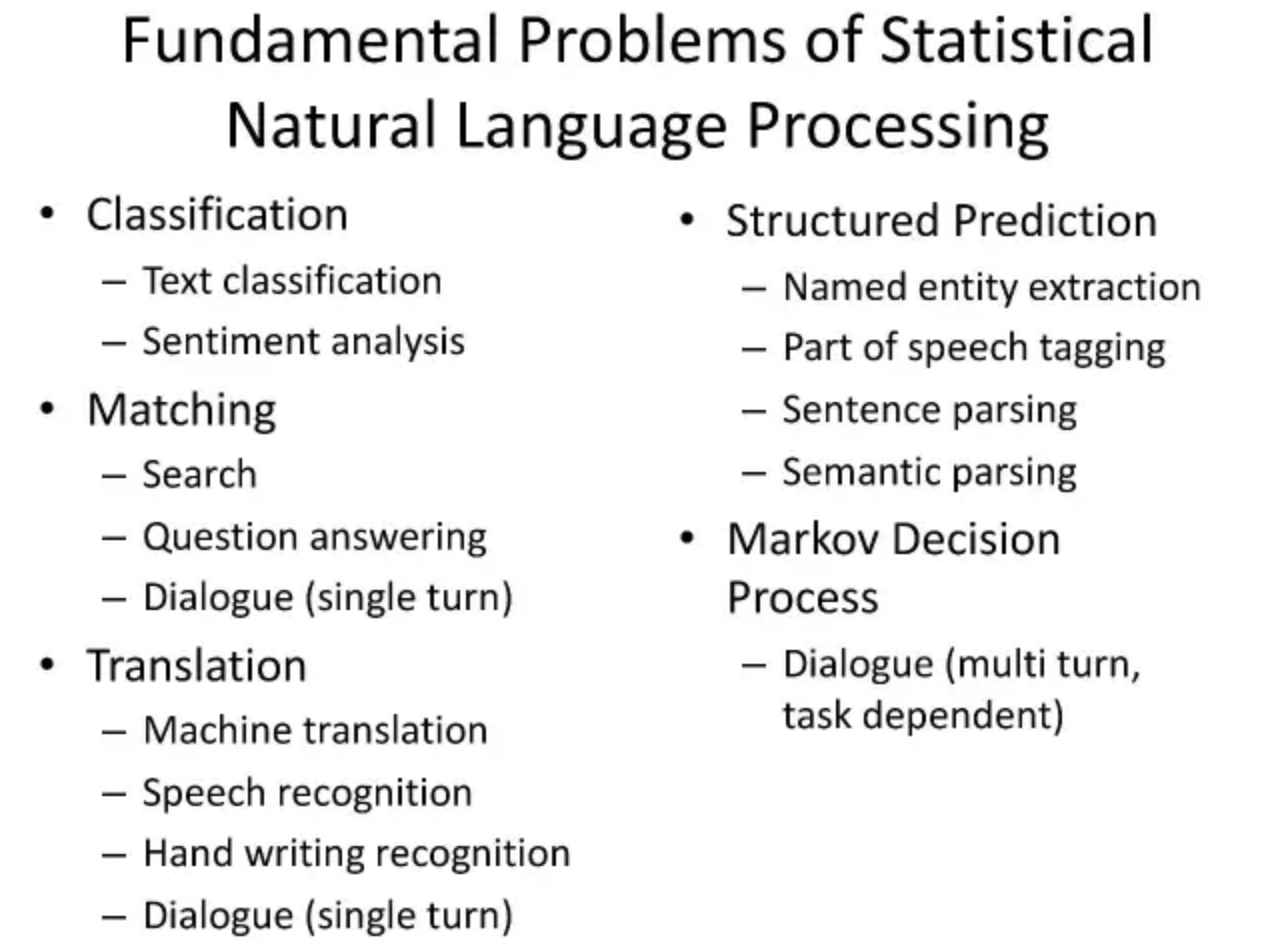

NLP实际上可以分为5个问题

- 分类

- 匹配

- 翻译

- 结构预测

- 马尔科夫决策过程

以此为划分,可以解决非常多的问题

Pipeline

NLP的pipeline基本是一样的

- 预处理

- 获取embedding

- 各种应用

文本预处理

预处理主要是为了处理成可以用处分析的形式

一般分为3步

- 噪音移除(一种可以用词袋,二者可以用正则表达式,枚举去除没用的单词比如is, the, a, am)

- 词典规范化(又包括stemming,词干提取,以及词性还原,lemmatization)这个可以用NLTK的包来实现。

- 对象标准化(用来处理在常见的词汇库不存在的单词,比如俚语,网络用语等,最简单的可以用字典枚举实现)

噪音移除

# Sample code to remove noisy words from a text

noise_list = ["is", "a", "this", "..."]

def _remove_noise(input_text):

words = input_text.split()

noise_free_words = [word for word in words if word not in noise_list]

noise_free_text = " ".join(noise_free_words)

return noise_free_text

_remove_noise("this is a sample text")

>>> "sample text"

# Sample code to remove a regex pattern

import re

def _remove_regex(input_text, regex_pattern):

urls = re.finditer(regex_pattern, input_text)

for i in urls:

input_text = re.sub(i.group().strip(), '', input_text)

return input_text

regex_pattern = "#[\w]*"

_remove_regex("remove this #hashtag from analytics vidhya", regex_pattern)

>>> "remove this from analytics vidhya"

词汇标准化

from nltk.stem.wordnet import WordNetLemmatizer

lem = WordNetLemmatizer()

from nltk.stem.porter import PorterStemmer

stem = PorterStemmer()

word = "multiplying"

lem.lemmatize(word, "v")

>> "multiply"

stem.stem(word)

>> "multipli"

目标标准化

lookup_dict = {'rt':'Retweet', 'dm':'direct message', "awsm" : "awesome", "luv" :"love", "..."}

def _lookup_words(input_text):

words = input_text.split()

new_words = []

for word in words:

if word.lower() in lookup_dict:

word = lookup_dict[word.lower()]

new_words.append(word) new_text = " ".join(new_words)

return new_text

_lookup_words("RT this is a retweeted tweet by Shivam Bansal")

>> "Retweet this is a retweeted tweet by Shivam Bansal"

文本 embedding

常用的embedding包括word2vec,GloVe

主要应用

- 计算相似度

- 寻找相似词

- 信息检索

- 作为 SVM/LSTM 等模型的输入

- 中文分词

- 命名体识别

- 句子表示

- 情感分析

文档表示

- 文档主题判别

Text Classification

- Text Summarization – Given a text article or paragraph, summarize it automatically to produce most important and relevant sentences in order.

- Machine Translation – Automatically translate text from one human language to another by taking care of grammar, semantics and information about the real world, etc.

- Natural Language Generation and Understanding – Convert information from computer databases or semantic intents into readable human language is called language generation. Converting chunks of text into more logical structures that are easier for computer programs to manipulate is called language understanding.

- Optical Character Recognition – Given an image representing printed text, determine the corresponding text.

- Document to Information – This involves parsing of textual data present in documents (websites, files, pdfs and images) to analyzable and clean format.

常见的NLP处理库

- Scikit-learn: Machine learning in Python

- Natural Language Toolkit (NLTK): The complete toolkit for all NLP techniques.

- Pattern – A web mining module for the with tools for NLP and machine learning.

- TextBlob – Easy to use nl p tools API, built on top of NLTK and Pattern.

- spaCy – Industrial strength N LP with Python and Cython.

- Gensim – Topic Modelling for Humans

- Stanford Core NLP – NLP services and packages by Stanford NLP Group.